Towards Markerless Grasp Capture

Samarth Brahmbhatt¹, Charlie Kemp, and James Hays¹,²

¹Georgia Tech Robotics, ²Argo AI

Abstract

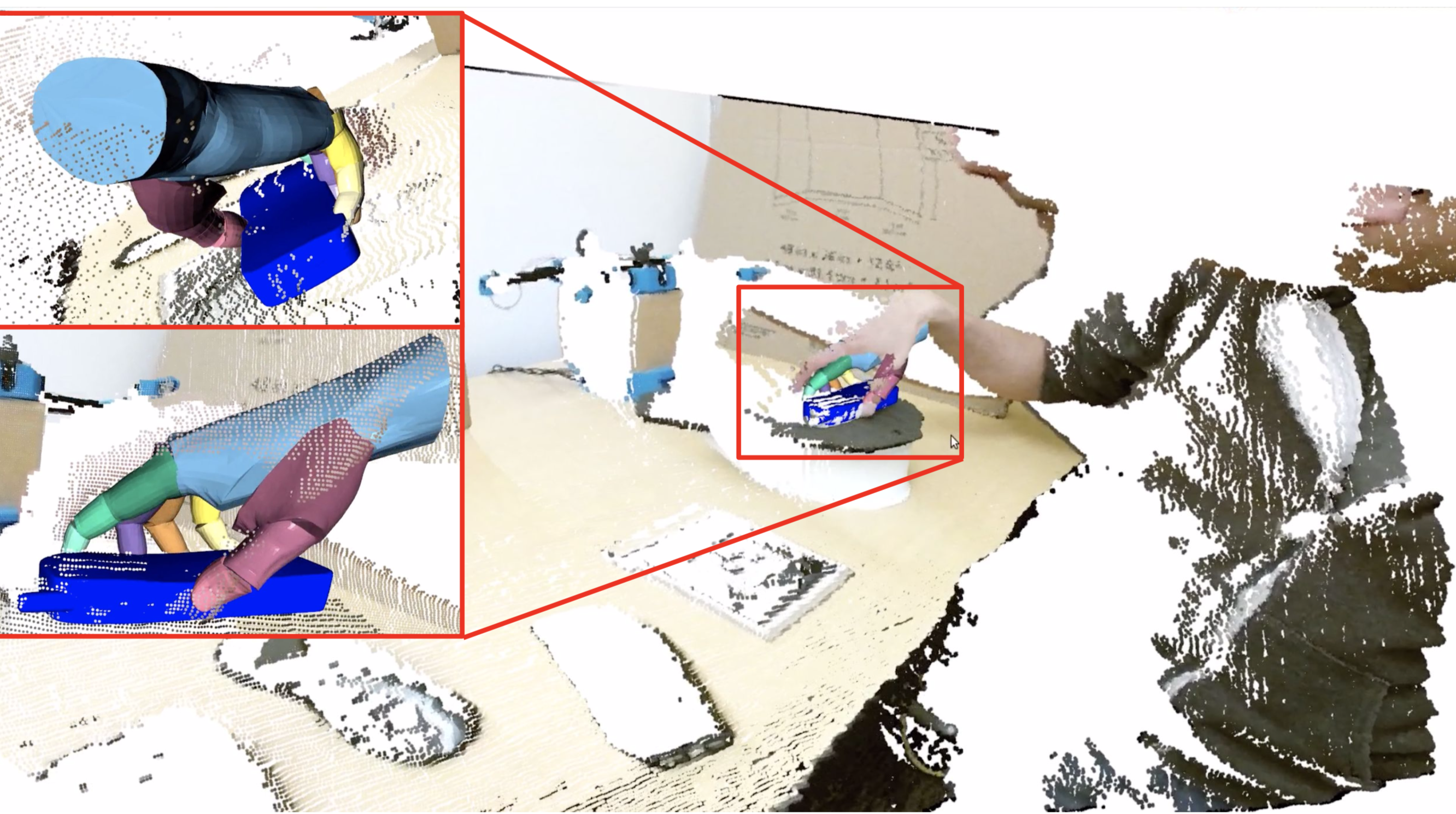

Humans excel at grasping and manipulating objects. Capturing human grasps is important for understanding grasping behavior and for reconstructing it realistically in Virtual Reality (VR). However, grasp capture (capturing the pose of a hand grasping an object and orienting it with respect to the object) is difficult because of the complexity and diversity of the human hand and because of occlusion. The reflective markers and magnetic trackers traditionally used to mitigate this difficulty introduce undesirable artifacts in images and can interfere with natural grasping behavior. We present preliminary work on a completely markerless algorithm that captures a grasp from a video depicting it. We show how recent advances in 2D hand pose estimation can be combined with well-established optimization techniques. Uniquely, our algorithm can also capture hand-object contact in detail and integrate it into the grasp capture process.
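The abstract does not specify which optimization is used. As one illustration of how 2D keypoint detections can feed a well-established least-squares technique, the following minimal sketch triangulates a single 3D hand keypoint from its 2D detections in several views. It assumes calibrated cameras with known 3x4 projection matrices (for example, video frames with known camera poses). The camera setup and all names (`project`, `triangulate`, `reprojection_error`, `solve3`) are hypothetical and are not the authors' pipeline.

```python
from typing import List, Sequence, Tuple

# Hypothetical setup: each view has a known 3x4 pinhole projection matrix P.
Matrix = Sequence[Sequence[float]]


def project(P: Matrix, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D point X through a 3x4 projection matrix P to pixel (u, v)."""
    Xh = (X[0], X[1], X[2], 1.0)
    u, v, w = [sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3)]
    return u / w, v / w


def solve3(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back-substitution on the upper-triangular system
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def triangulate(Ps: Sequence[Matrix], uvs: Sequence[Tuple[float, float]]) -> List[float]:
    """Linear least-squares triangulation of one keypoint seen in several views.

    Each view gives two equations, u*P[2] - P[0] = 0 and v*P[2] - P[1] = 0,
    on the homogeneous point (x, y, z, 1). Fixing the last coordinate to 1
    yields an ordinary least-squares problem, solved via the normal equations.
    """
    rows, rhs = [], []
    for P, (u, v) in zip(Ps, uvs):
        for coord, k in ((u, 0), (v, 1)):
            a = [coord * P[2][c] - P[k][c] for c in range(4)]
            rows.append(a[:3])
            rhs.append(-a[3])
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * bi for r, bi in zip(rows, rhs)) for i in range(3)]
    return solve3(AtA, Atb)


def reprojection_error(Ps: Sequence[Matrix], uvs: Sequence[Tuple[float, float]],
                       X: Sequence[float]) -> float:
    """Mean pixel distance between observed 2D keypoints and projections of X."""
    total = 0.0
    for P, (u, v) in zip(Ps, uvs):
        pu, pv = project(P, X)
        total += ((pu - u) ** 2 + (pv - v) ** 2) ** 0.5
    return total / len(Ps)


# Usage: two hypothetical cameras with focal length 500 px and a 10 cm baseline.
P1 = [[500, 0, 320, 0], [0, 500, 240, 0], [0, 0, 1, 0]]
P2 = [[500, 0, 320, -50], [0, 500, 240, 0], [0, 0, 1, 0]]
X_true = [0.05, -0.02, 0.6]
observations = [project(P1, X_true), project(P2, X_true)]  # noise-free 2D keypoints
X_hat = triangulate([P1, P2], observations)  # approximately [0.05, -0.02, 0.6]
print(X_hat, reprojection_error([P1, P2], observations, X_hat))
```

With real detections the 2D keypoints are noisy, so the linear solution is usually only a starting point. A nonlinear refinement that directly minimizes `reprojection_error` is a common next step.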